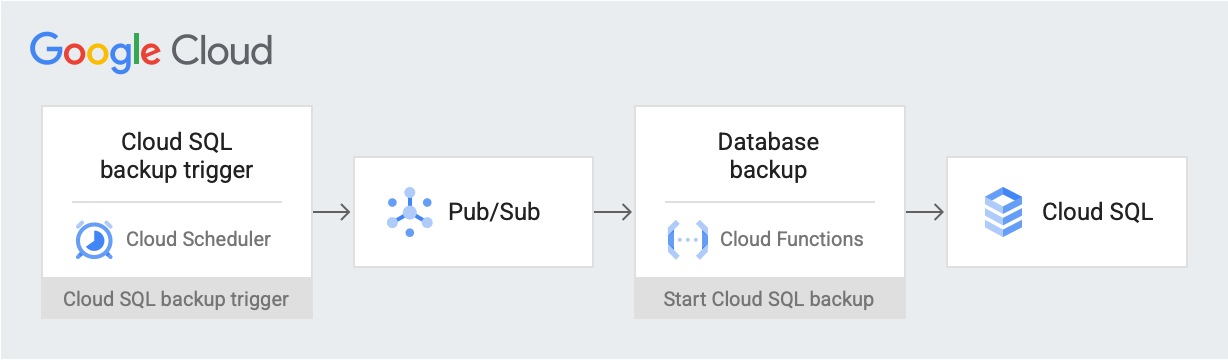

Schedule Cloud SQL backups

Goal:

Use Cloud Scheduler and Cloud Functions to schedule manual backups for a Cloud SQL database.

使用 Cloud Scheduler 和 Cloud Functions 為 Cloud SQL 資料庫安排手動備份。

Steps:

- Create a Cloud SQL for the PostgreSQL database instance and import the test database from the Cloud Storage bucket into the instance.

建立一個 Cloud SQL for PostgreSQL 資料庫實例,並將測試資料庫從 Cloud Storage 存儲桶匯入到此實例中。 - Create a Cloud Scheduler job that posts a backup trigger message at a scheduled date and time on a Pub/Sub topic. The message contains information about the Cloud SQL Admin API to start a database backup on Cloud SQL

建立一個 Cloud Scheduler 工作,以預定日期和時間在 Pub/Sub 主題上發布備份觸發訊息。 該訊息包含有關 Cloud SQL 管理員 API 的資訊,以便在 Cloud SQL 上啟動數據庫備份。

GCP Components:

- Cloud Storage: Stores the test databases that you import into Cloud SQL.

- Cloud SQL instance: Contains the database to backup.

- Cloud Scheduler: Posts messages to a Pub/Sub topic on a set schedule.

- Pub/Sub: Contains messages sent from the Cloud Scheduler.

- Cloud Functions: Subscribes to the Pub/Sub topic and makes an API call to the Cloud SQL instance to initiate the backup when triggered.

APIs:

- Cloud SQL Admin API

- Cloud Functions API

- Cloud Scheduler API

- Cloud Build API

- App Engine Admin API

Set up the environment:

In this step, configure your environment and create custom roles that have the permissions.

在這個步驟中,請配置您的環境並創建具有權限的自定義角色。

- Configure the following environment variables in Cloud Shell:

在 Cloud Shell 中配置以下環境變數

export PROJECT_ID=`gcloud config get-value project` export DEMO="sql-backup-tutorial" export BUCKET_NAME=${USER}-postgresql-$(date +%s) export SQL_INSTANCE="${DEMO}-sql" export GCF_NAME="${DEMO}-gcf" export PUBSUB_TOPIC="${DEMO}-topic" export SCHEDULER_JOB="${DEMO}-job" export SQL_ROLE="sqlBackupCreator" export STORAGE_ROLE="simpleStorageRole" export REGION="us-west2"

2. Create two custom roles that have only the permissions.

建立兩個自訂角色,僅具有權限。

gcloud iam roles create ${STORAGE_ROLE} --project ${PROJECT_ID} \

--title "Simple Storage role" \

--description "Grant permissions to view and create objects in Cloud Storage" \

--permissions "storage.objects.create,storage.objects.get"gcloud iam roles create ${SQL_ROLE} --project ${PROJECT_ID} \

--title "SQL Backup role" \

--description "Grant permissions to backup data from a Cloud SQL instance" \

--permissions "cloudsql.backupRuns.create"Create a Cloud SQL instance:

In this step, create a Cloud Storage bucket and a Cloud SQL for the PostgreSQL instance. Then upload the test database to the Cloud Storage and import the database from there into the Cloud SQL instance.

在這個步驟中,請建立一個 Cloud Storage 存儲桶和一個 PostgreSQL 的 Cloud SQL 實例。然後將測試數據庫上傳到 Cloud Storage 中,再從那裡導入數據庫到 Cloud SQL 實例中。

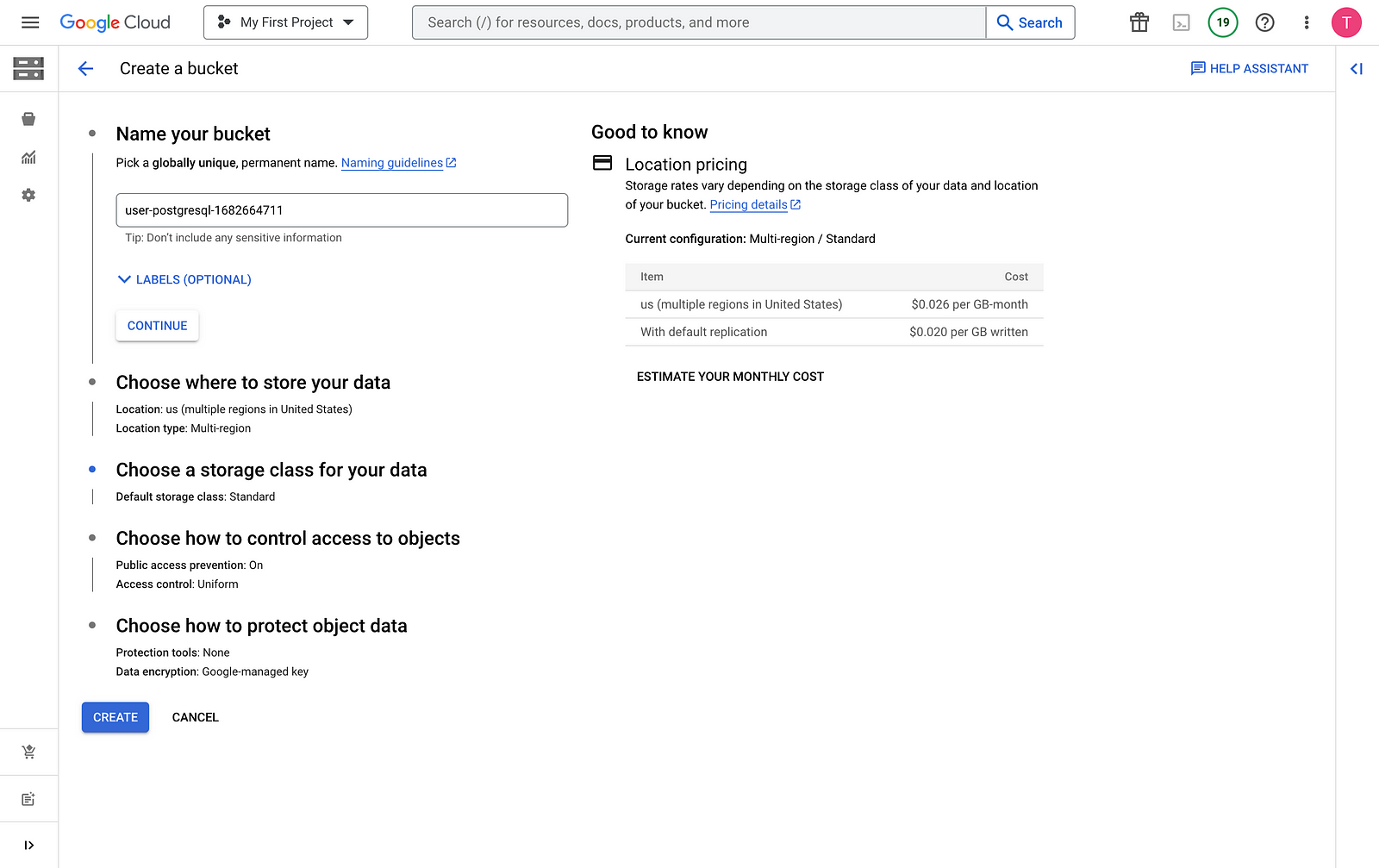

Create a Cloud Storage Bucket

建立 GCS

GCP Cloud Shell:

gsutil mb -l ${REGION} gs://${BUCKET_NAME}

GCP Console:

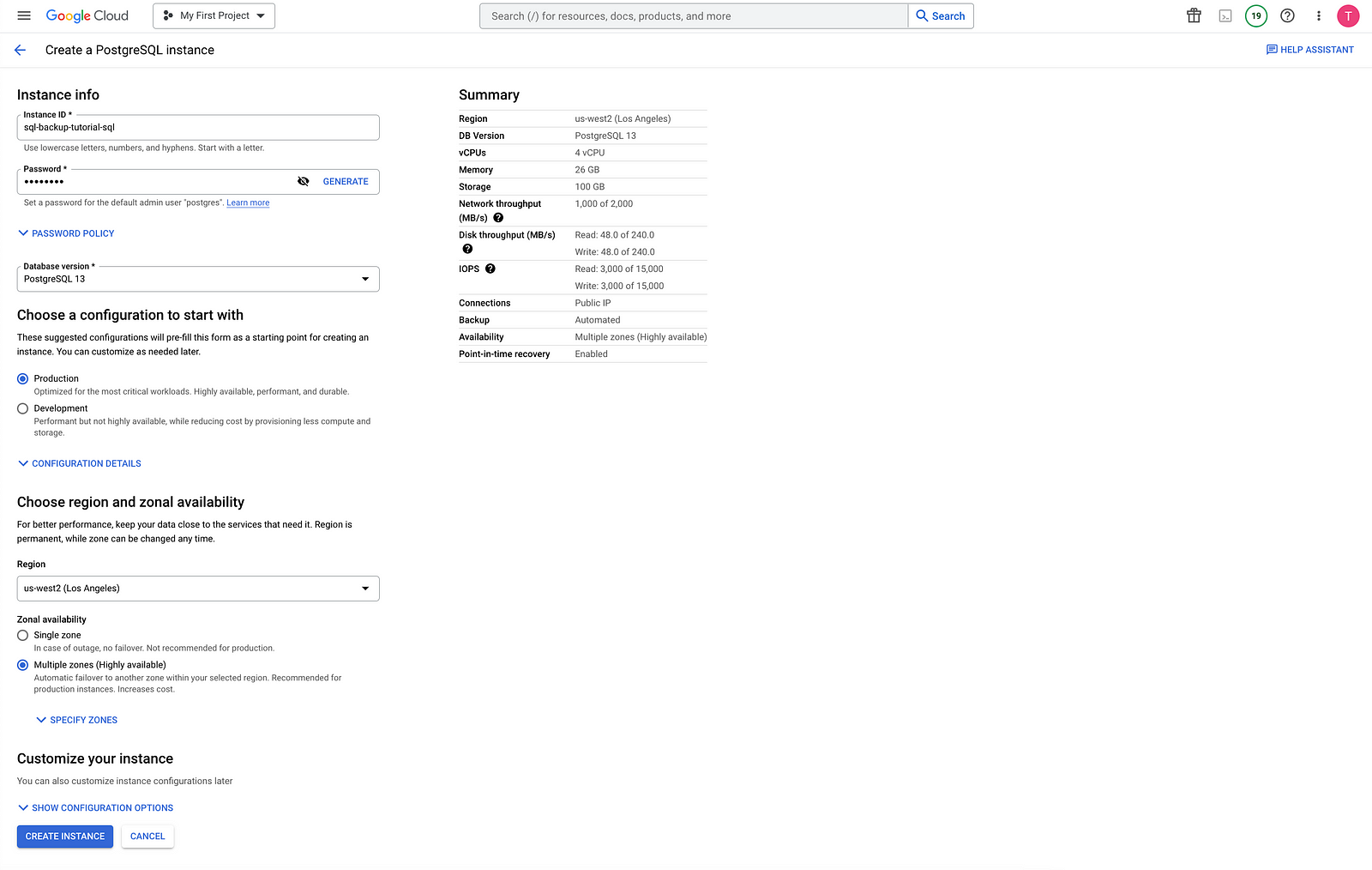

Create a Cloud SQL instance and grant permissions to its service account

建立一個 Cloud SQL 實例並授權其服務帳戶

GCP Cloud Shell:

1. Create a Cloud SQL for the PostgreSQL instance

1. 建立一個 PostgreSQL 的 Cloud SQL 實例。

sh gcloud sql instances create ${SQL_INSTANCE} --database-version POSTGRES_13 --region ${REGION}2. Verify that the Cloud SQL instance is running

2. 驗證 Cloud SQL 實例是否正在運行

gcloud sql instances list --filter name=${SQL_INSTANCE}3. Grant your Cloud SQL service account the permission to export data to Cloud Storage with the Simple Storage

3. 授予您的 Cloud SQL 服務帳戶使用 Simple Storage 將數據匯出到 Cloud Storage 的權限。

export SQL_SA=(`gcloud sql instances describe ${SQL_INSTANCE} \

--project ${PROJECT_ID} \

--format "value(serviceAccountEmailAddress)"`)gsutil iam ch serviceAccount:${SQL_SA}:projects/${PROJECT_ID}/roles/${STORAGE_ROLE} gs://${BUCKET_NAME}

GCP Console:

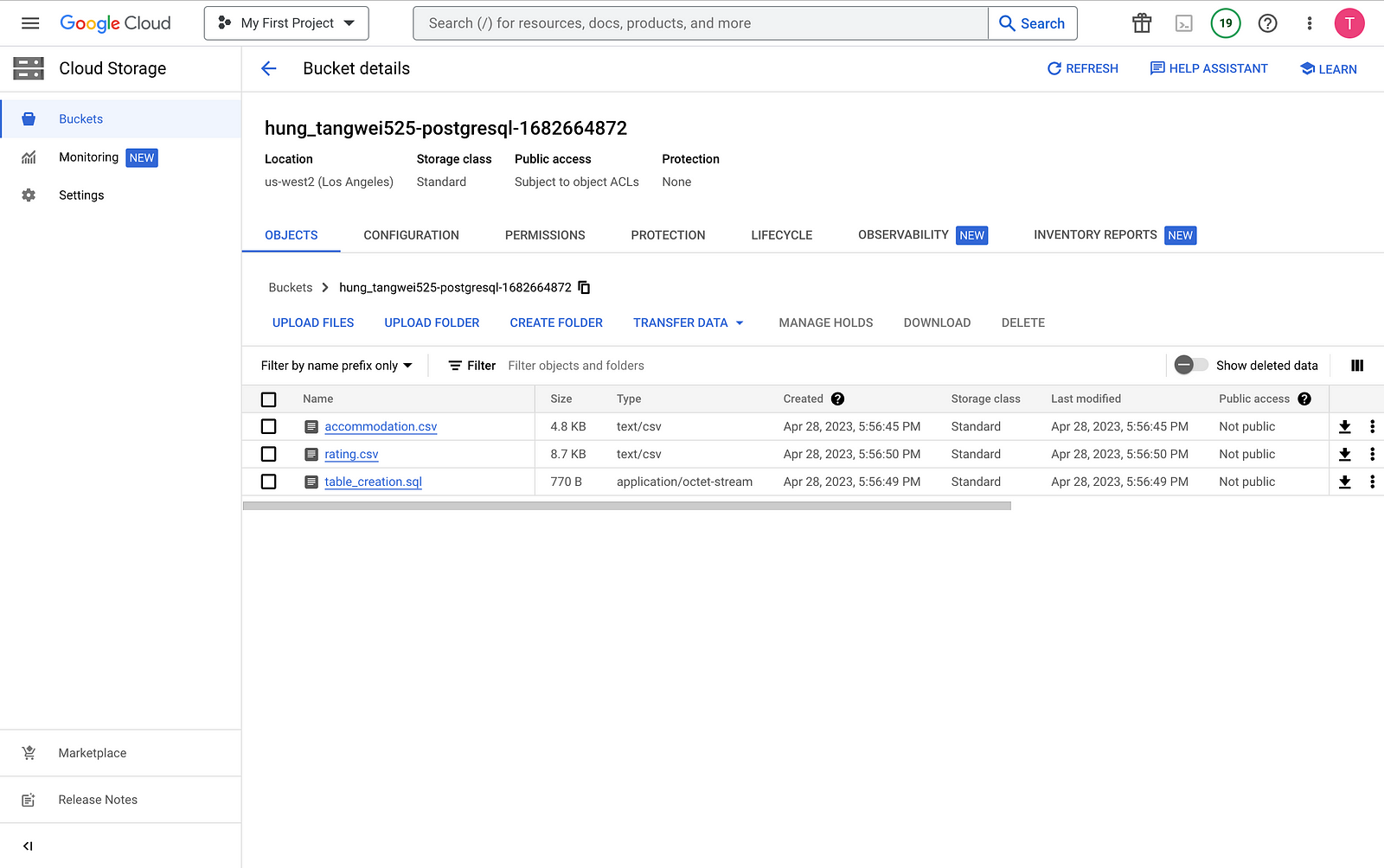

Populate the Cloud SQL instance with sample data

GCP Cloud Shell:

- Upload the files to your new bucket

將檔案上傳至您的新存儲桶。

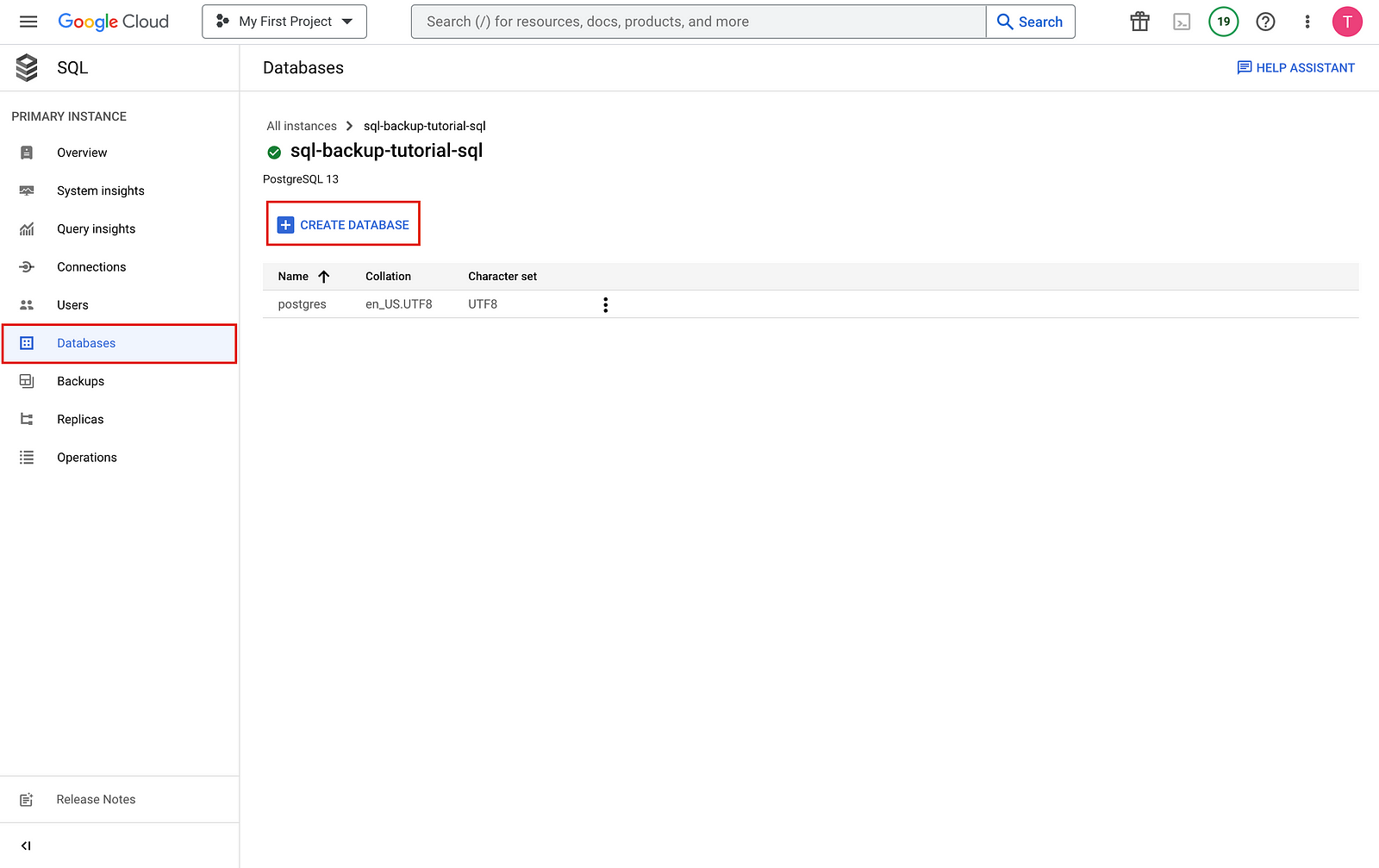

gsutil cp * gs://${BUCKET_NAME}2. Create a sample database

建立一個樣本資料庫

gcloud sql import sql ${SQL_INSTANCE} gs://${BUCKET_NAME}/table_creation.sql --project ${PROJECT_ID}3. Populate the database

填充資料庫

gcloud sql import csv ${SQL_INSTANCE} gs://${BUCKET_NAME}/accommodation.csv \

--database recommendation_spark \

--table Accommodationgcloud sql import csv ${SQL_INSTANCE} gs://${BUCKET_NAME}/rating.csv \

--database recommendation_spark \

--table RatingGCP Console:

- Upload the files to your new bucket

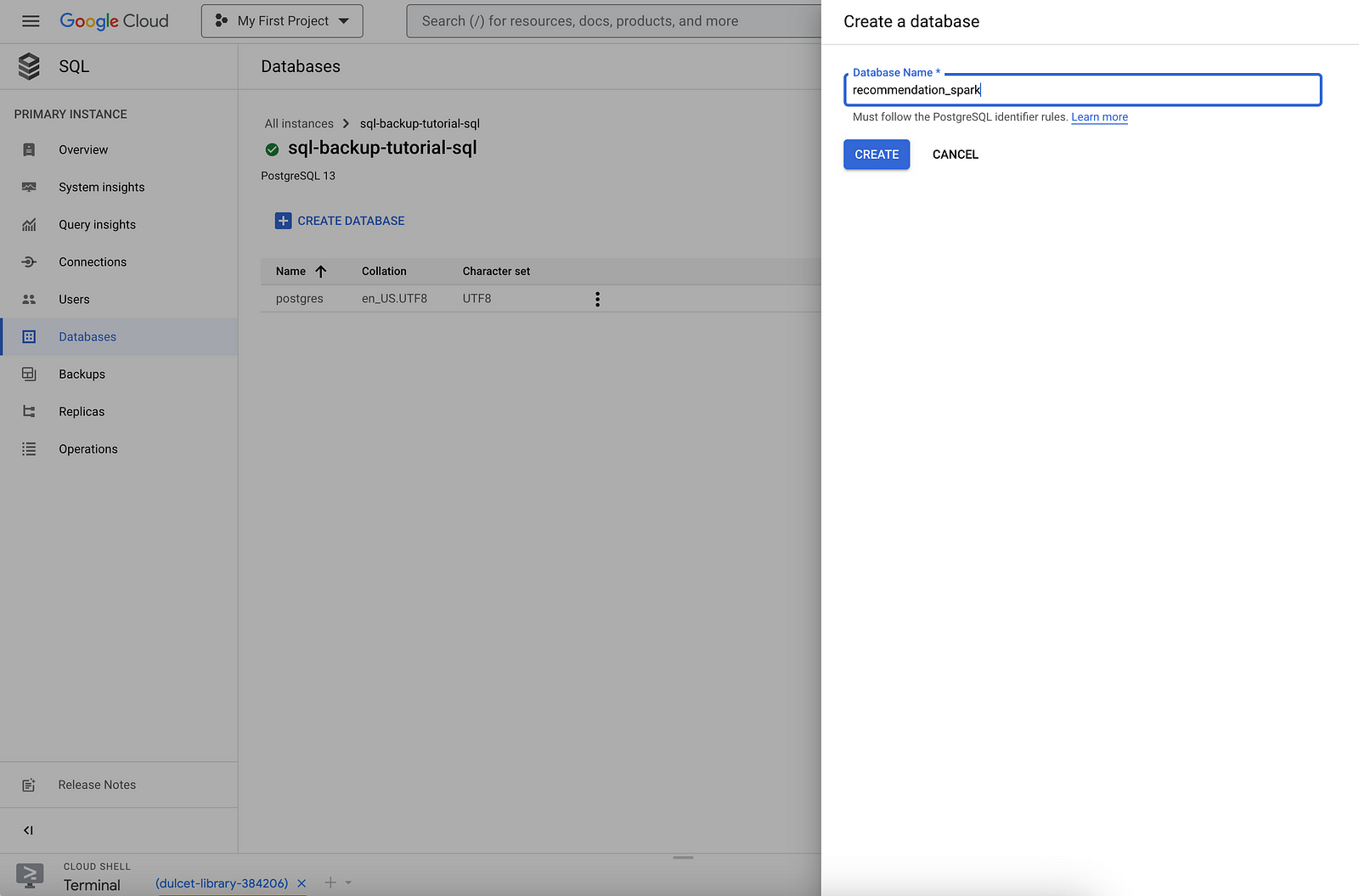

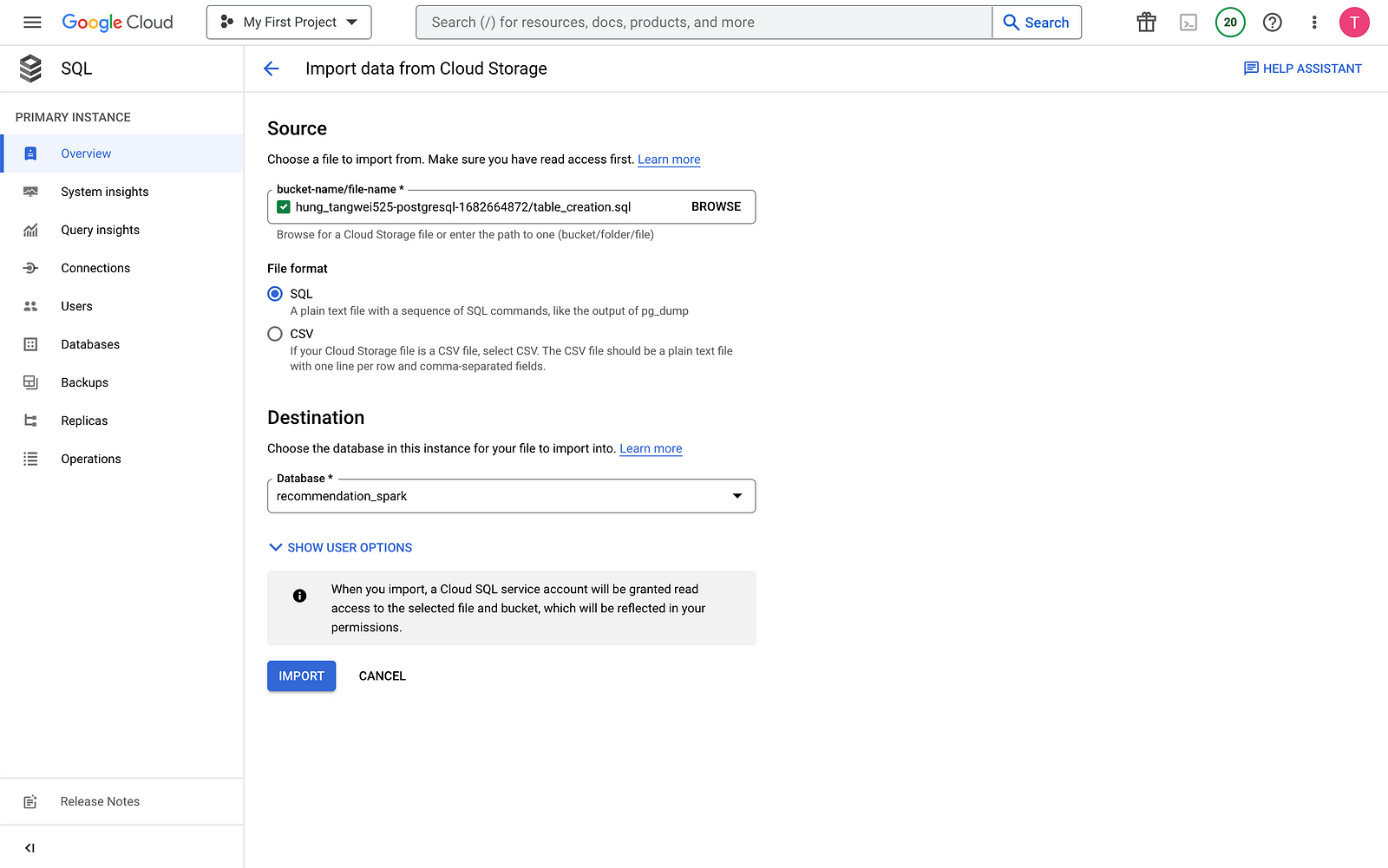

2. Create a sample database

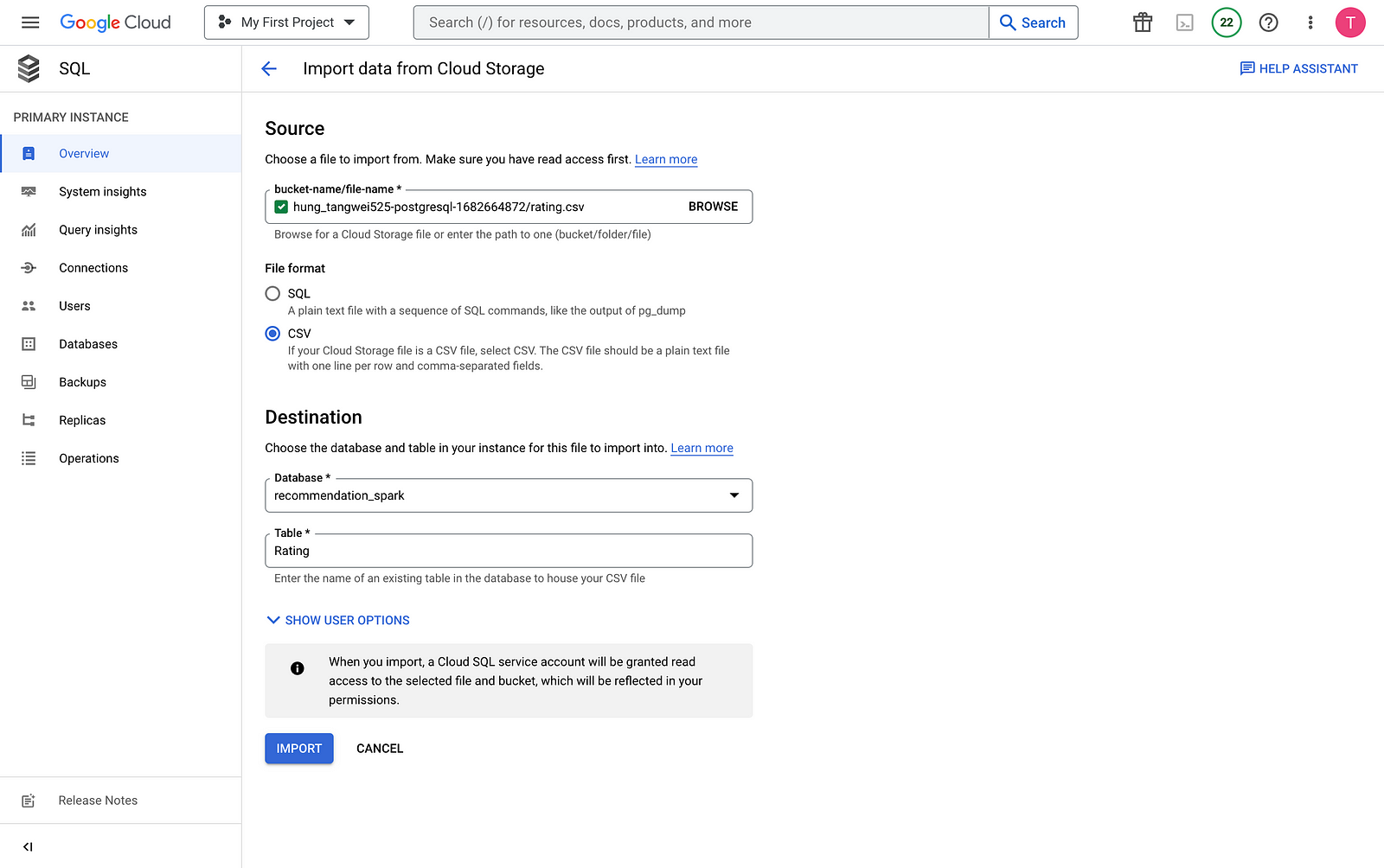

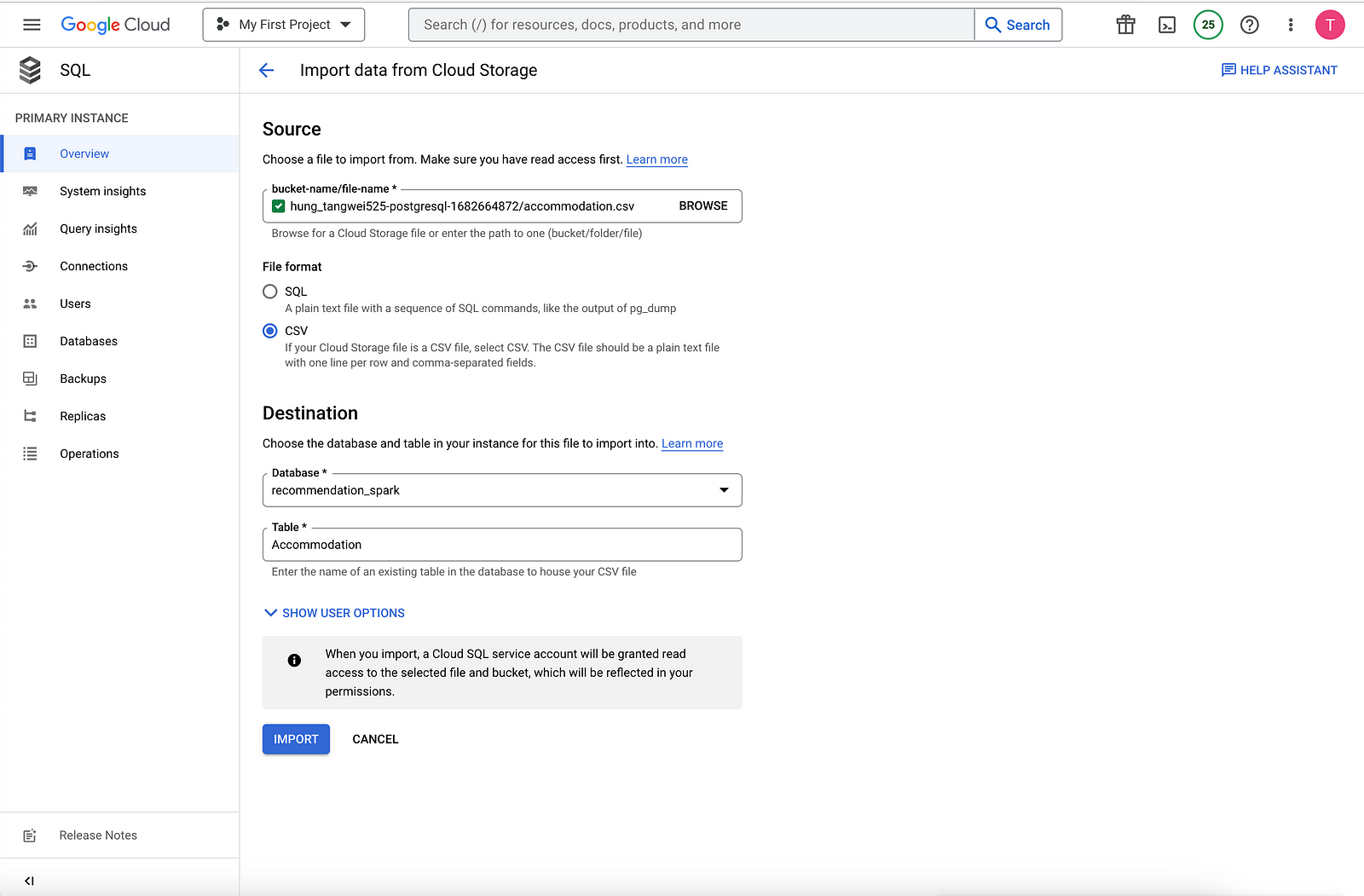

3. Populate the database

Create a topic, a function, and a scheduler job

In this step, create a custom IAM service account and bind it to the custom SQL role that you created in Set up environment part. Then create a Pub/Sub topic and a Cloud Function that subscribes to the topic, and uses the Cloud SQL Admin API to initiate a backup. Finally, create a Cloud Scheduler job to post a message to the Pub/Sub topic periodically.

在這個步驟中,請建立一個自訂的 IAM 服務帳戶並將其綁定到您在「設置環境」部分創建的自訂 SQL 角色。然後創建一個 Pub/Sub 主題和一個 Cloud Function 訂閱該主題,並使用 Cloud SQL 管理 API 啟動備份。最後,創建一個 Cloud Scheduler 工作以定期發布消息到 Pub/Sub 主題。

Create a service account for the Cloud Function

為 Cloud Function 建立服務帳戶

Create a custom service account and bind it to the custom SQL role that you created in Set up environment.

建立一個自訂服務帳戶,並將其綁定到您在「設置環境」中建立的自訂 SQL 角色。

- Create an IAM service account to be used by the Cloud Function

gcloud iam service-accounts create ${GCF_NAME} \

--display-name "Service Account for GCF and SQL Admin API"2. Grant the Cloud Function service account access to the custom SQL role

gcloud projects add-iam-policy-binding ${PROJECT_ID} \

--member="serviceAccount:${GCF_NAME}@${PROJECT_ID}.iam.gserviceaccount.com" \

--role="projects/${PROJECT_ID}/roles/${SQL_ROLE}"

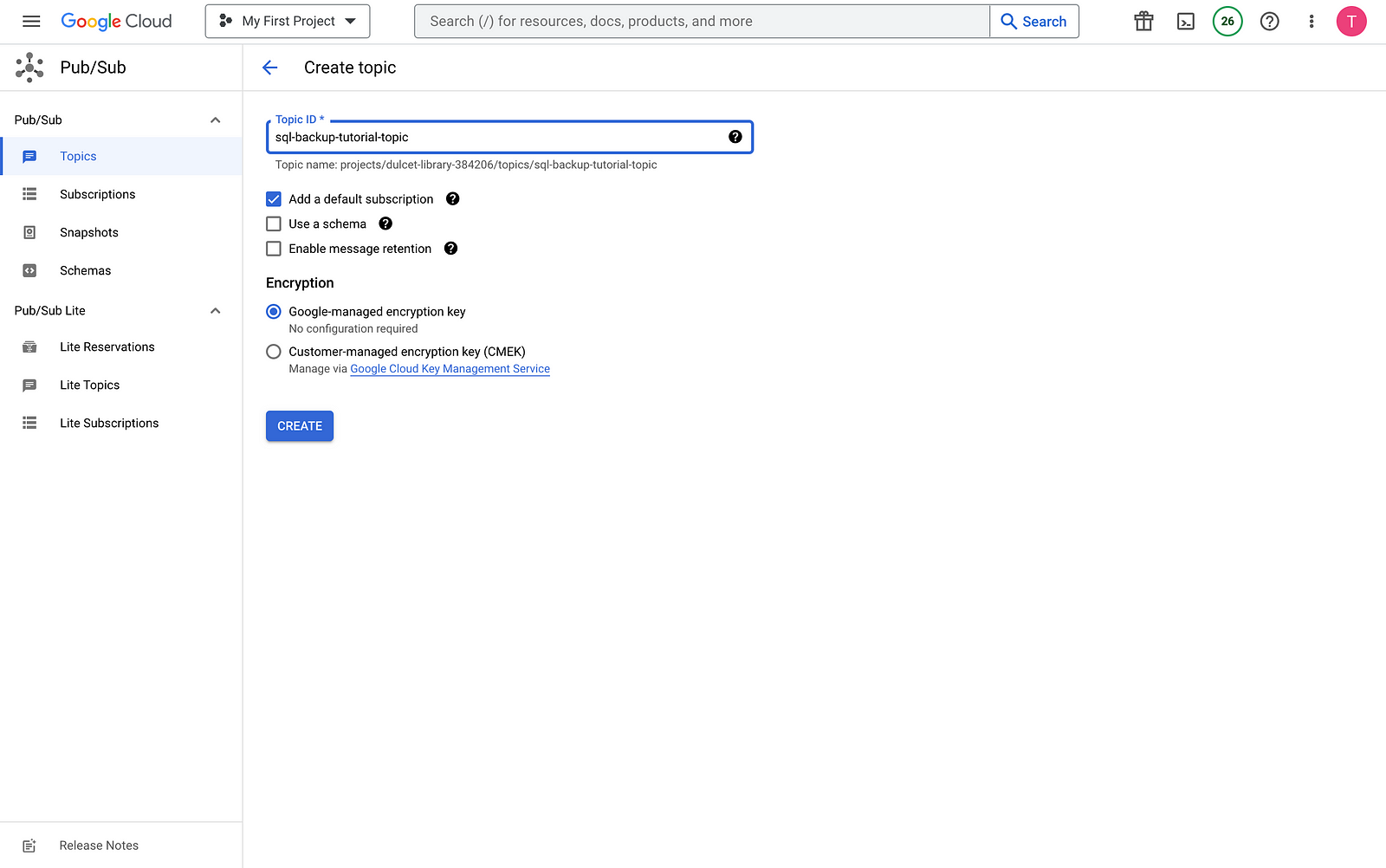

Create a Pub/Sub topic

建立一個 Pub/Sub 主題

Create a Pub/Sub topic that’s used to trigger the Cloud Function that interacts with the CloudSQL database.

建立一個 Pub/Sub 主題,用於觸發與 CloudSQL 資料庫互動的 Cloud Function。

GCP Cloud Shell:

gcloud pubsub topics create ${PUBSUB_TOPIC}GCP Console:

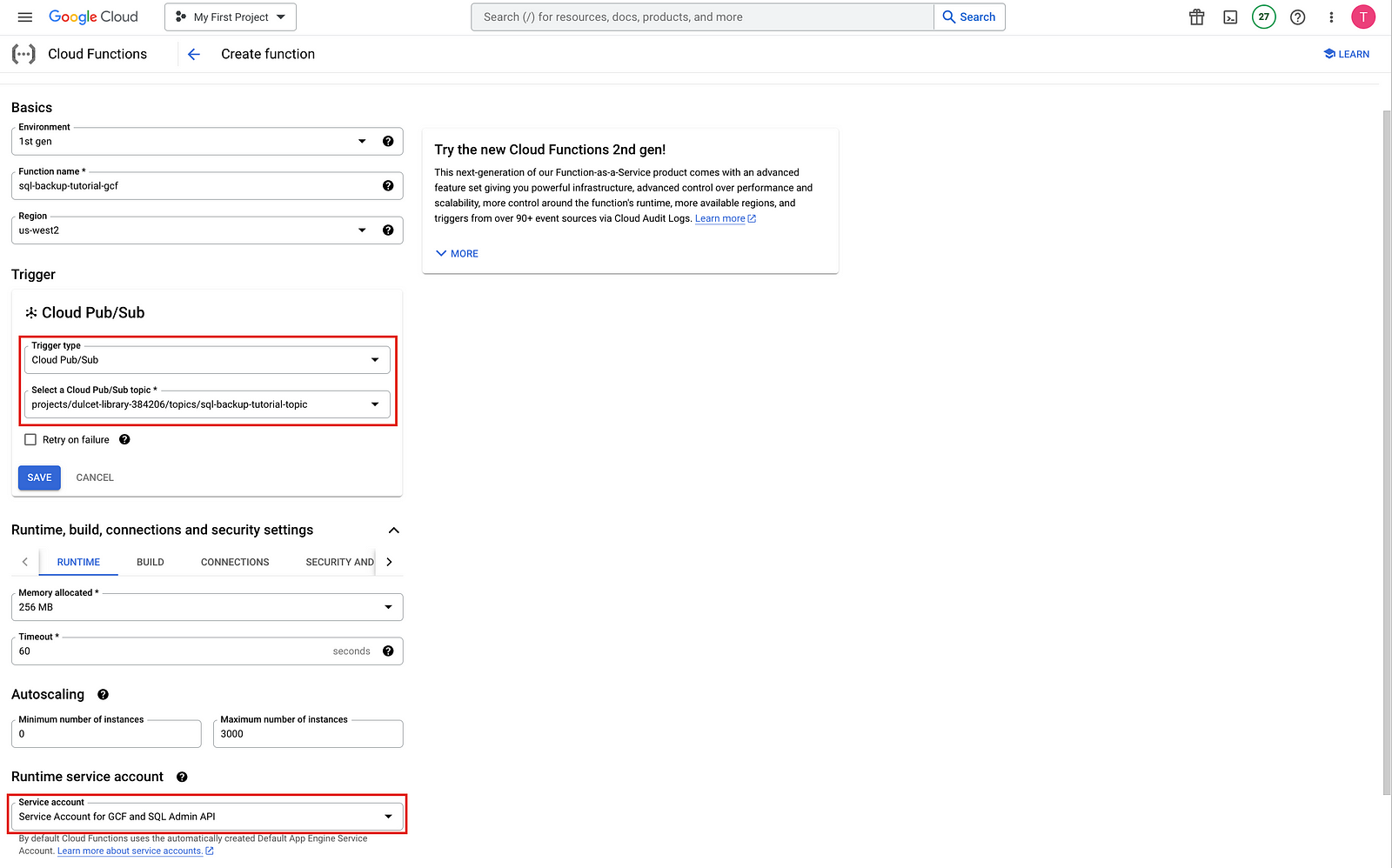

Create a Cloud Function

建立一個Cloud Function

Cloud Shell:

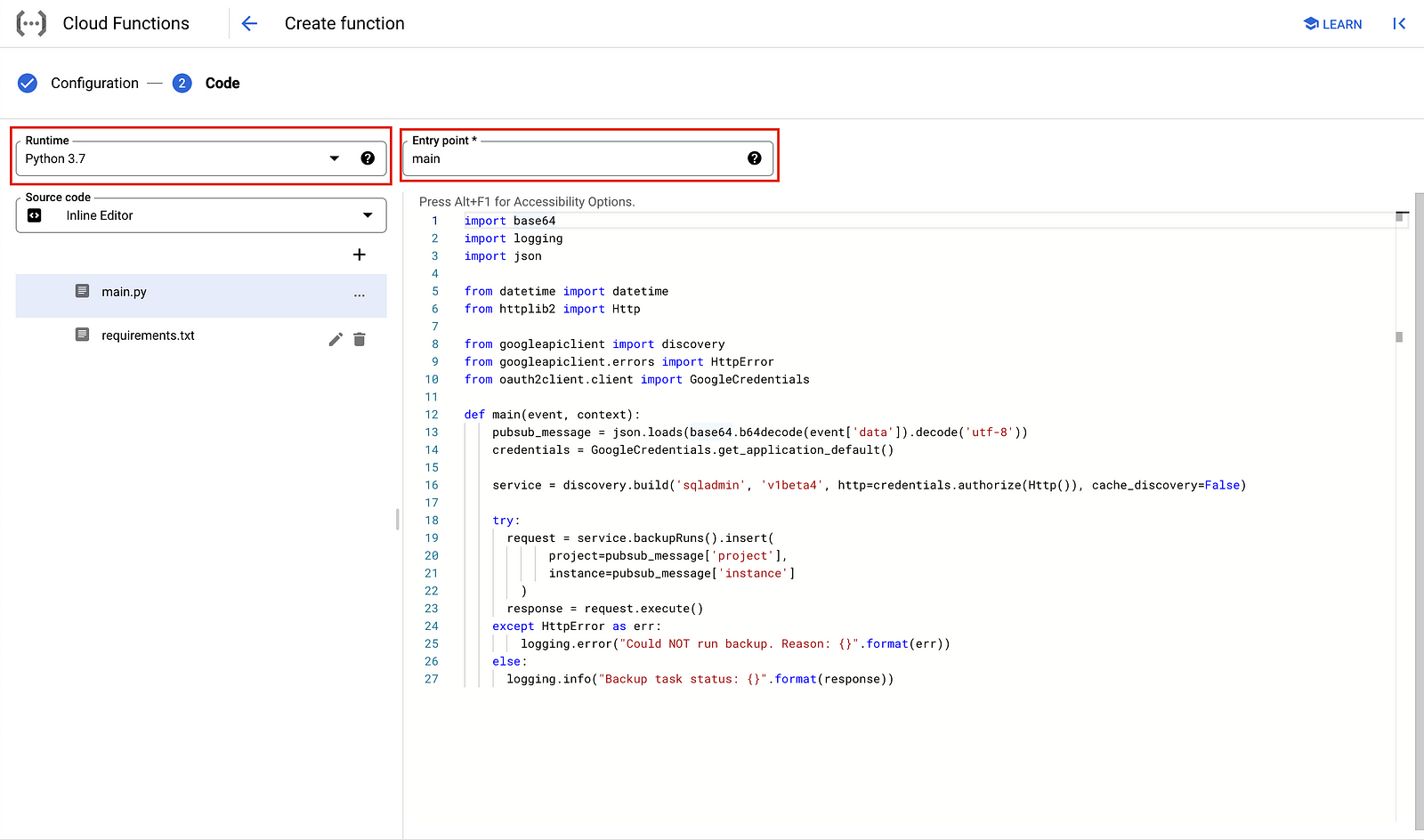

- Create a

main.pyfile by pasting the following into Cloud Shell

cat <<EOF > main.py

import base64

import logging

import json

from datetime import datetime

from httplib2 import Http

from googleapiclient import discovery

from googleapiclient.errors import HttpError

from oauth2client.client import GoogleCredentials

def main(event, context):

pubsub_message = json.loads(base64.b64decode(event['data']).decode('utf-8'))

credentials = GoogleCredentials.get_application_default()

service = discovery.build('sqladmin', 'v1beta4', http=credentials.authorize(Http()), cache_discovery=False)

try:

request = service.backupRuns().insert(

project=pubsub_message['project'],

instance=pubsub_message['instance']

)

response = request.execute()

except HttpError as err:

logging.error("Could NOT run backup. Reason: {}".format(err))

else:

logging.info("Backup task status: {}".format(response))

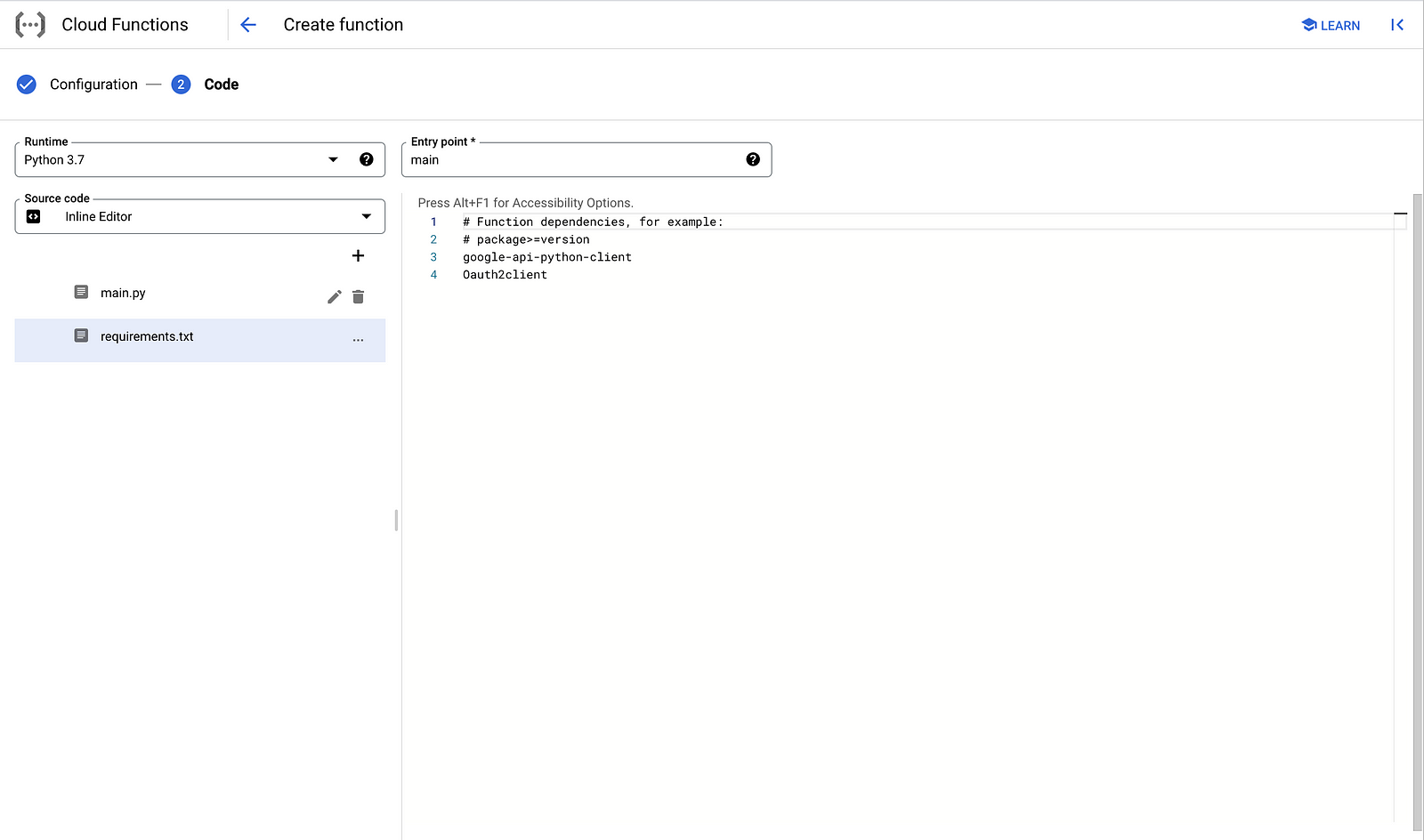

EOF2. Create a requirements.txt file by pasting the following into Cloud Shell

cat <<EOF > requirements.txt

google-api-python-client

Oauth2client

EOF3. Deploy the code

gcloud functions deploy ${GCF_NAME} \

--trigger-topic ${PUBSUB_TOPIC} \

--runtime python37 \

--entry-point main \

--service-account ${GCF_NAME}@${PROJECT_ID}.iam.gserviceaccount.comGCP Console:

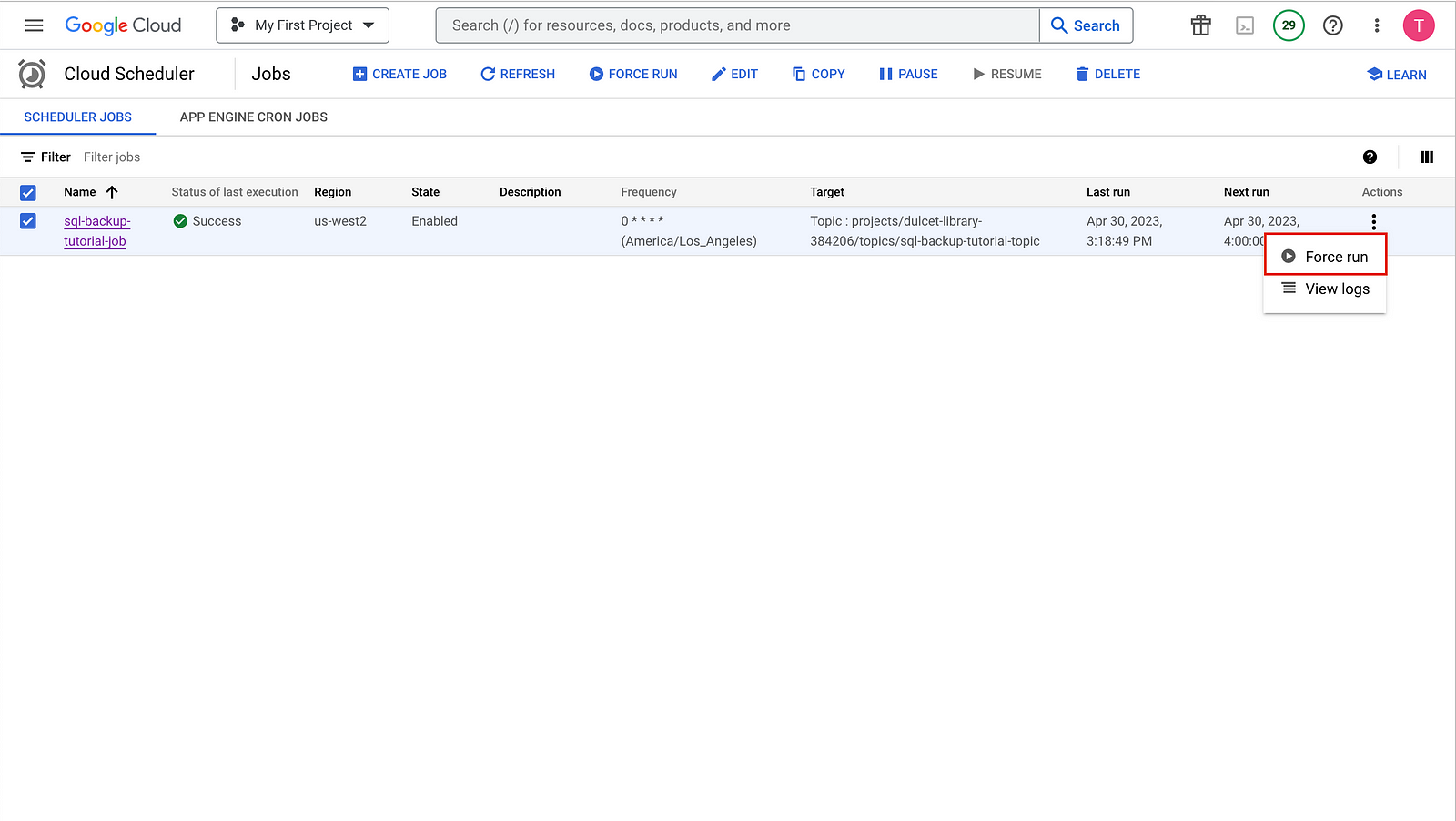

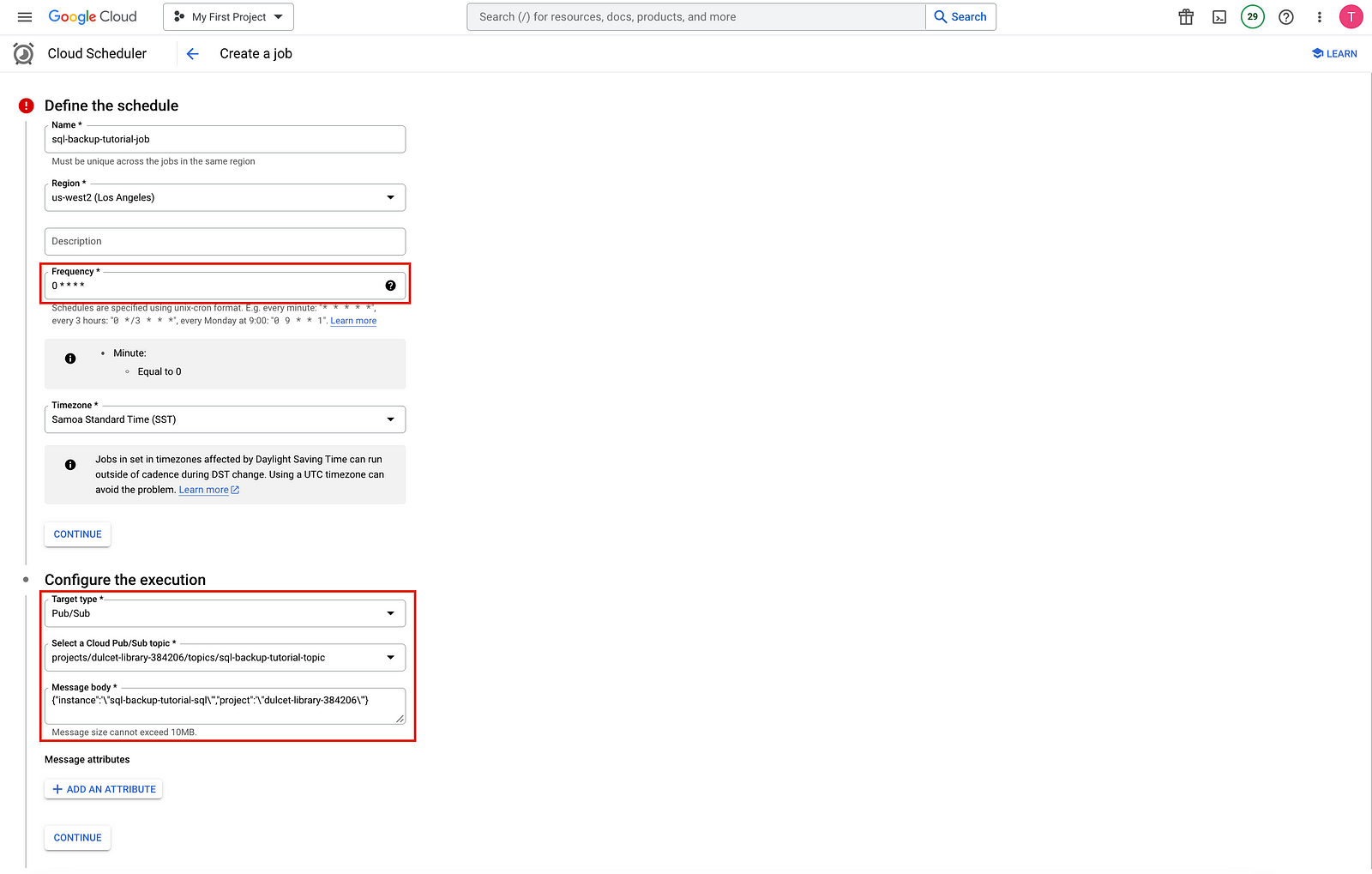

Create a Cloud Scheduler job

建立一個Cloud Scheduler任務

Finally, you create a Cloud Scheduler job to periodically trigger the data backup function on an hourly basis. Cloud Scheduler uses an App Engine instance for deployment.

最後,您可以建立一個 Cloud Scheduler 工作來定期觸發每小時的數據備份功能。Cloud Scheduler 使用 App Engine 實例進行部署。

Cloud Shell:

- Create an APP Engine instance for the Cloud Scheduler job

gcloud app create --region=${REGION}2. Create a Cloud Scheduler job

gcloud scheduler jobs create pubsub ${SCHEDULER_JOB} \

--schedule "0 * * * *" \

--topic ${PUBSUB_TOPIC} \

--message-body '{"instance":'\"${SQL_INSTANCE}\"',"project":'\"${PROJECT_ID}\"'}' \

--time-zone 'America/Los_Angeles'GCP Console:

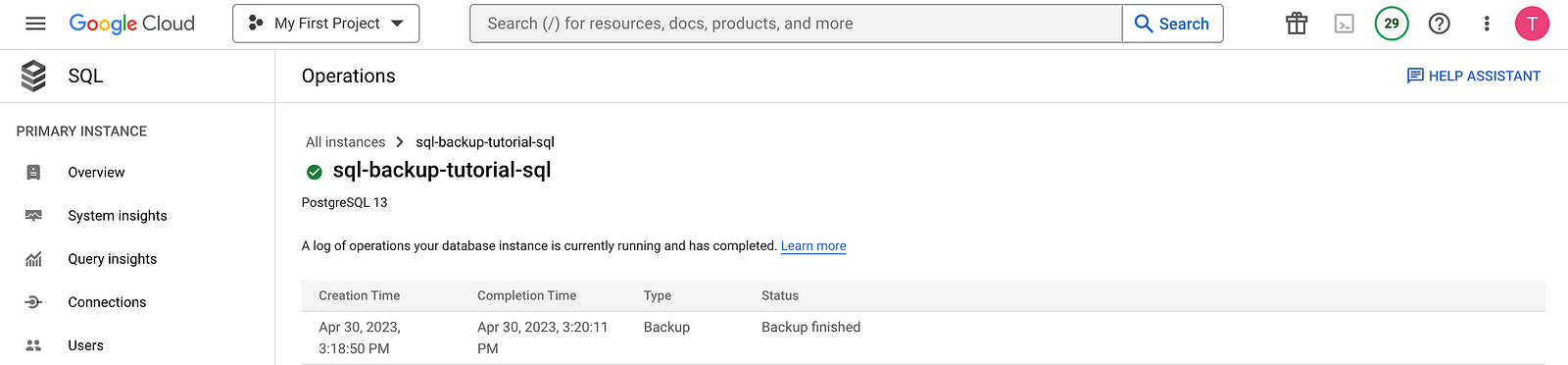

Test your solution

This step is to test your solution. Start by running the Cloud Scheduler job.

Cloud Shell:

- Run the Cloud Scheduler job manually to trigger a PostgreSQL dump of your database.

gcloud scheduler jobs run ${SCHEDULER_JOB}2. List the operations performed on the PostgreSQL instance, and verify that there’s an operation of type BACKUP_VOLUME

gcloud sql operations list --instance ${SQL_INSTANCE} --limit 1GCP Console: